China this week chose not to sign on to an international “blueprint” agreed to by some 60 nations, including the U.S., that looks to establish guardrails when using artificial intelligence (AI) for military use.

More than 90 nations attended the Responsible Artificial Intelligence in the Military Domain (REAIM) summit hosted in South Korea on Monday and Tuesday, though roughly a third of the attendees did not support the nonbinding proposal.

AI expert Arthur Herman, senior fellow and director of the Quantum Alliance Initiative with the Hudson Institute, told Fox News Digital that the fact some 30 nations opted out of this important development in the race to develop AI is not necessarily cause for concern, though in Beijing’s case it is likely due to its general opposition to signing multilateral agreements.

People are shown prior to the closing session of the REAIM summit in Seoul, South Korea, on Sept. 10, 2024. (JUNG YEON-JE/AFP via Getty Images)


“What it boils down to … is China is always wary of any kind of international agreement in which it has not been the architect or involved in creating and organizing how that agreement is going to be shaped and implemented,” he said. “I think the Chinese see all of these efforts, all of these multilateral endeavors, as ways in which to try and constrain and limit China’s ability to use AI to enhance its military edge.”

Herman explained that the summit, and the blueprint agreed to by some five dozen nations, is an attempt to safeguard the expanding technology surrounding AI by ensuring there is always “human control” over the systems in place, particularly as it relates to military and defense matters.

“The algorithms that drive defense systems and weapons systems rely so much on how fast they can go,” he said. “[They] move quickly to gather information and data that you then can speed back to command and control so they can then make the decision.

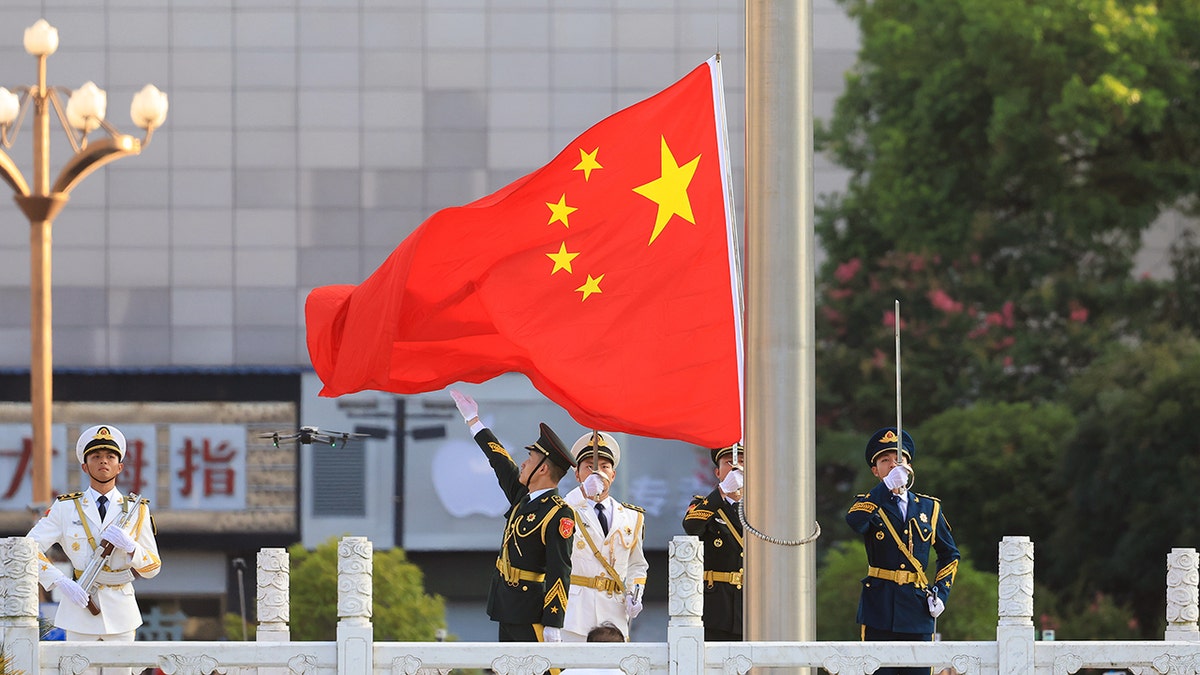

The Guard of Honor of the Chinese People’s Liberation Army performs a flag-raising ceremony at Bayi Square to celebrate the 97th anniversary of China’s Army Day on Aug. 1, 2024, in Nanchang. (Ma Yue/VCG via Getty Images)

“The speed with which AI moves … that is hugely important on the battlefield,” he added. “If the decision that the AI-driven system is making involves taking a human life, then you definitely want it to be one in which it is a human being that makes the final call about a decision of that sort.”

Participants are shown with the Tenebris, a medium-size unmanned surface vessel concept, on display at the REAIM summit in Seoul, South Korea, on Sept. 10, 2024. (JUNG YEON-JE/AFP via Getty Images)

Nations leading in AI development, like the U.S., have said maintaining a human element in serious battlefield decisions is hugely important to avoid mistaken casualties and prevent a machine-driven conflict.


The summit, which was co-hosted by the Netherlands, Singapore, Kenya and the United Kingdom, was the second of its kind after more than 60 nations attended the first meeting last year held in the Dutch capital.

It remains unclear why China, along with some 30 other countries, opted not to agree to the building blocks that look to set up AI safeguards, particularly after Beijing backed a similar “call to action” during the summit last year.

When pressed for details of the summit during a Wednesday press conference, Chinese Foreign Ministry spokesperson Mao Ning said that upon invitation, China sent a delegation to the summit where it “elaborated on China’s rules of AI governance.”

Mao pointed to the “Global AI Governance Initiative” put forward by Chinese President Xi Jinping last October that she said “provides a systemic view on China’s governance propositions.”

Participants look at a miniature version of the KF-21 fighter jet on display at the REAIM summit in Seoul, South Korea, on Sept. 10, 2024. (JUNG YEON-JE/AFP via Getty Images)

The spokesperson did not say why China did not back the nonbinding blueprint introduced during the REAIM summit this week but added that “China will remain open and constructive in working with other parties and deliver more tangibly for humanity through AI development.”


Herman warned that while nations like the U.S. and its allies will look to establish multilateral agreements for safeguarding AI practices in military use, they are unlikely to do much in the way of deterring adversarial nations like China, Russia and Iran from developing malign technologies.

“When you’re talking about nuclear proliferation or missile technology, the best restraint is deterrence,” the AI expert explained. “You force those who are determined to push ahead with using AI – even to the point of basically using AI as sort of [an] automated kill mechanism, because they see it in their interest to do so – the way in which you constrain them is by making it clear, if you develop weapons like that, we can use them against you in the same way.

“You don’t count on their sense of altruism or high ethical standards to restrain them, that’s not how that works,” Herman added.

Reuters contributed to this report.